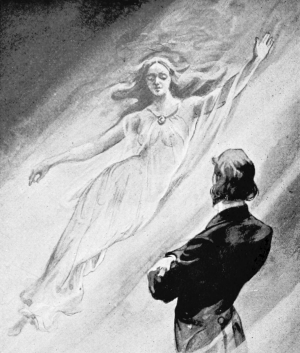

Solipsism, the belief that you are the only thing that really exists (everything else is dreamed or imagined by you), is generally regarded as obviously false; indeed, I’ve got it on a list somewhere here as one of the conclusions that tell you pretty clearly that your reasoning went wrong somewhere along the way; a sort of reductio ad absurdum. There don’t seem to be any solipsists (except perhaps those who believe in the metempsychotic version), but perhaps there are really plenty of them and they just don’t bother telling the rest of us about their belief, since in their eyes we don’t exist.

Still, there are some arguments for solipsism, especially its splendid parsimony. William of Occam advised us to use as few angels as possible in our cosmology, or more generally not to multiply entities unnecessarily. Solipsism reduces our ontological demand to a single entity, so if parsimony is important it leads the field. Or does it? Apart from oneself, the rest of the cosmos, according to solipsists, is merely smoke and mirrors; but smoke takes some arranging and mirrors don’t come cheap. In order for oneself to imagine all this complex universe, one’s own mind must be pretty packed with stuff, so the reduction in the external world is paid for by an increase in the internal one, and it becomes a tricky metaphysical question as to whether deeming the entire cosmos to be mental in nature actually reduces one’s ontological commitment or not.

Curiously enough, there is a relatively new argument for solipsism which runs broadly parallel to this discussion, derived from physics and particularly from the statistics of entropy. The second law of thermodynamics tells us that entropy increases over time; given that, it’s arguably kind of odd that we have a universe with non-maximal entropy in the first place. One possibility, put forward with several other ideas by Ludwig Boltzmann in 1896, is that the second law is merely a matter of probability. While entropy generally tends to increase, it may at times go the other way simply by chance. So although our observed galaxy is highly improbable, if you wait long enough it can occur as a pocket of low entropy arising from random fluctuations in a vastly larger and older universe (really old; it would have to be hugely older than we currently believe the cosmos to be) whose normal state is close to maximal entropy.

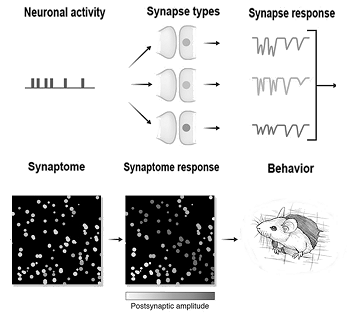

One problem with this is that while the occurrence of our galaxy by chance is possible, it’s much more likely that a single brain in the state required for it to be having one’s current experiences should arise from random fluctuations. In a Boltzmannic universe, there will be many, many more ‘Boltzmann brains’ like this than there will be complete galaxies like ours. Such brains cannot tell whether the universe they seem to perceive is real or merely a function of their random brain states; statistically, therefore, it is overwhelmingly likely that one is in fact a Boltzmann brain.
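To put rough symbols on that comparison (the notation here is mine, added for illustration, and the estimate is only the standard textbook one for thermal fluctuations): the chance of a random dip in entropy of size $\Delta S$ below equilibrium falls off exponentially with the size of the dip, so the relative odds of a brain versus a galaxy look something like this:

$$
P(\Delta S) \sim e^{-\Delta S / k_B},
\qquad
\frac{P_{\text{brain}}}{P_{\text{galaxy}}} \sim \exp\!\left(\frac{\Delta S_{\text{galaxy}} - \Delta S_{\text{brain}}}{k_B}\right) \gg 1 .
$$

Here $k_B$ is Boltzmann’s constant, and $\Delta S_{\text{brain}}$ and $\Delta S_{\text{galaxy}}$ stand for the entropy reductions needed to assemble a lone brain and a whole galaxy respectively. Since the galaxy needs a vastly bigger dip, the exponent is huge and positive, which is all the ‘many, many more’ claim amounts to.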

To me the relatively low probability demands of the Boltzmann brain, compared with those of a full universe, interestingly resemble the claimed low ontological requirement of the solipsism hypothesis, and there is another parallel. Both hypotheses are mainly used as leverage in reductio arguments: because the conclusion is absurd, something must be wrong with the assumptions or the reasoning that got us there. So if your proposed cosmology gives rise to the kind of universe where Boltzmann brains crop up ‘regularly’, that’s a large hit against your theory.

Usually these arguments, both in relation to solipsism and Boltzmann brains, simply rest on incredulity. It’s held to be just obvious that these things are false. And indeed it is obvious, but at a philosophical level, that won’t really do; the fact that something seems nuts is not enough, because nutty ideas have proven true in the past. For the formal logical application of reductio, we actually require the absurd conclusion to be self-contradictory; not just silly, but logically untenable.

Last year, Sean Carroll came up with an argument designed to beef up the case against Boltzmann brains in just the kind of way that seems to be required; he contends that theories that produce them cannot simultaneously be true and justifiably believed. Do we really need such ‘fancy’ arguments? Some apparently think not. If mere common sense is not enough, we can appeal to observation. A Boltzmann brain is a local, temporary thing, so we ought to be able to discover whether we are one by observing very distant phenomena, or simply by waiting for the current brain states to fall apart and dissolve. Indeed, the fact that we can remember a long and complex history is in itself evidence against our being Boltzmann brains.

But appeals to empirical evidence cannot really do the job; there are several ways around them. First, we need not restrict ourselves literally to a naked brain; even if we surround it with enough structured world to keep the illusion going for a bit, our setup is still vastly more likely than a whole galaxy or universe. Second, time is no help because all our minds can really access is the current moment; our memories might easily be false and we might only be here for a moment. Third, most people would agree that we don’t necessarily need a biological brain to support consciousness; we could be some kind of conscious machine supplied with a kind of recording of our ‘experiences’. The requirement for such a machine could easily be less than for the disconnected biological brain.

So what is Carroll’s argument? He maintains that the idea of Boltzmann brains is cognitively unstable. If we really are such a brain, or some similar entity, we have no reason to think that the external world is anything like what we think it is. But all our ideas about entropy and the universe come from the very observations that those ideas now apparently undermine. We don’t quite have a contradiction, but we have an idea that removes the reasons we had for believing in it. We may not strictly be able to prove such ideas wrong, but it seems reasonable, methodologically at least, to avoid them.

One problem is those pesky arguments about solipsism. We may no longer be able to rely on the arguments about entropy in the cosmos, but can’t we borrow Occam’s Razor and point out that a cosmos that contains a single Boltzmann brain is ontologically far less demanding than a whole universe? Perhaps the Boltzmann arguments provide a neat physics counterpart for a philosophical case that ultimately rests on parsimony?

In the end, we can’t exactly prove solipsism false; but we can perhaps do something loosely akin to Carroll’s manoeuvre by asking: so what if it’s true? Can we ignore the apparent world? If we are indeed the only entity, what should we do about it, either practically or in terms of our beliefs? If solipsism is true, we cannot learn anything about the external world because it’s not there, just as in Carroll’s scenario we can’t learn about the actual world because all our perceptions and memories are systematically false. We might as well get on with investigating what we can investigate, or what seems to be true.