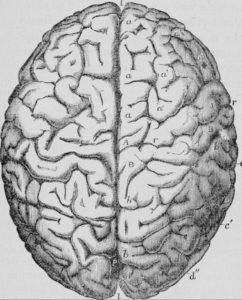

A whole set of interesting articles from IEEE Spectrum explores the question of whether AI can and should copy the human brain more closely. Of course, so-called neural networks were originally inspired by the way the brain works, but they represent a drastic simplification of a partial understanding. In fact, they are so unlike real neurons that it’s rather remarkable they turn out to perform useful processes at all. Karlheinz Meier provides a useful review of the development of neuromorphic computing, up to contemporary chips with impressive performance.

Jeff Hawkins suggests the brain is better in three ways. First, it learns by rewiring, that is, by growing new synapses. This confers three benefits: learning that is fast, incremental, and continuous. The brain does not need to do lengthy retraining to learn new things. Remarkably, he says a single neuron can do substantial pieces of pattern recognition and acquire ‘knowledge’ of several patterns without them interfering with each other.

The second way in which the brain is better is that it uses sparse distributed representations; a particular idea such as ‘cat’ can be represented by a large number of neurons, with only a small percentage needing to be active at any one time. This makes the system robust in respect of noise and damage, but because some of the ‘cat’ neurons may play roles in the representation of other animals and other entities, it also makes it quick and efficient at recognising similarities and dealing with vague ideas (an animal in the bush which may or may not be a cat).
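To make the idea a little more concrete, here is a minimal sketch in Python of a sparse distributed representation as a set of active neuron indices. Everything specific in it is an assumption chosen for illustration, not something taken from Hawkins’ articles: the population size of 2048, the 40 active neurons per concept, the helper names, and the decision to make ‘dog’ share 15 neurons with ‘cat’.

```python
import random

N = 2048      # assumed size of the neuron population
ACTIVE = 40   # assumed number of active neurons per concept (about 2%)


def random_sdr(rng, n=N, active=ACTIVE):
    """Pick `active` neuron indices out of `n` to stand for a concept."""
    return frozenset(rng.sample(range(n), active))


def related_sdr(rng, base, shared, n=N, active=ACTIVE):
    """Build a representation that reuses `shared` neurons from `base`."""
    kept = rng.sample(sorted(base), shared)
    pool = [i for i in range(n) if i not in base]
    fresh = rng.sample(pool, active - shared)
    return frozenset(kept) | frozenset(fresh)


def damage(rng, sdr, lost):
    """Silence `lost` of the active neurons to mimic noise or damage."""
    return frozenset(rng.sample(sorted(sdr), len(sdr) - lost))


def overlap(a, b):
    """Number of active neurons two representations have in common."""
    return len(a & b)


rng = random.Random(0)
cat = random_sdr(rng)
dog = related_sdr(rng, cat, shared=15)   # hypothetical similar concept
teapot = random_sdr(rng)                 # hypothetical unrelated concept
noisy_cat = damage(rng, cat, lost=10)

print("cat vs damaged cat:", overlap(cat, noisy_cat))  # 30 by construction
print("cat vs dog:        ", overlap(cat, dog))        # 15 by construction
print("cat vs teapot:     ", overlap(cat, teapot))     # chance overlap only
```

Even with a quarter of its neurons silenced, the damaged ‘cat’ still overlaps the original far more than an unrelated concept would by chance, which is the robustness point; and the shared neurons between ‘cat’ and ‘dog’ give a cheap measure of similarity. Real cortical representations are of course not built by hand like this.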

The third thing the brain does better, according to Hawkins, is sensorimotor integration. He makes the interesting claim that the brain effectively does this all over, as part of basic ordinary activity, not as a specialised central function. Instead of one big 3D model of the world, we have what amounts to little ones everywhere. This is interesting partly because it is, prima facie, so implausible. Doing your modelling a hundred or a million times over is going to use up a lot of energy and ‘processing power’, and it raises the obvious risk of inconsistency between models. But Hawkins says he has a detailed theory of how it works, and you’d have to be bold to dismiss his claim.

There are several other articles, all worth a look. In fact, there are several different reasons we might want to imitate the brain. We might want computers that can interface with humans better because, in part, they work in similar ways. We might want to understand the brain better and be able to test our understanding; an ability that might have real benefits for treating brain disease and injury, and to some degree make up for the ethical limitations on the experiments we can perform on humans. The main focus here, though, is on learning how to do those things the brain does so well, but which still cannot be done efficiently, or in some cases at all, by computers.

As a strategy, copying the brain has several drawbacks. First, we still don’t understand the brain well enough. Things have moved on greatly in recent years, but in some ways that just shows how limited our understanding was to begin with. There’s a significant danger that by imitating the brain without understanding, we end up reproducing features that are functionally irrelevant; features the brain has for chance evolutionary reasons. Do we need a brain divided into two halves, as those of vertebrates generally are, or is that unimportant? Second, one thing we do know is that the brain is extraordinarily complex and finely structured. We are never going to reproduce all that in full detail, but perhaps it doesn’t matter; we’ve never replicated the exquisite engineering of feather technology either, but that didn’t stop us achieving flight or understanding birds.

I think the challenge of understanding the brain is unique, but trying to copy it is probably an increasingly productive strategy.