Marcin Milkowski has produced a short survey of arguments against computationalism; his aim is in fact to show that they all fail and that computationalism is likely to be both true and non-trivial. The treatment of each argument is very brief; the paper could easily be expanded into a substantial book. But it’s handy to have a comprehensive list, and he does seem to have done a decent job of covering the ground.

There are a couple of weaknesses in his strategy. One is simply that defeating arguments against your position does not in itself establish that your position is correct. But he may also need to do more than he thinks. He says computationalism is the belief ‘that the brain is a kind of information-processing mechanism, and that information-processing is necessary for cognition’. But I think some would accept that as true in at least some senses while denying that information-processing is sufficient for consciousness, or that it is what characterises consciousness. There’s really no denying that the brain does computation, at least on some readings of ‘computation’, or some of the time. Indeed, computation is an invention of the human mind and arguably does not exist without it. But that does not make it essential. We can register numbers with our fingers, and in that sense a hand is a digital calculator; but digital calculation isn’t really what hands do, and if we’re looking for the secret of manipulation we need to look elsewhere.

Be that as it may, a review of the arguments is well worthwhile. The first objection is that computationalism is essentially a metaphor; Milkowski rules this out by definition, specifying that his claim is about literal computation. The second objection is that nothing in the brain seems to match the computational distinction between software and hardware. Rather than look for equivalents, Milkowski takes this one on the nose, arguing that we can have computation without distinguishing software from hardware. To my mind that concedes quite a large gulf between brain activity and what we normally think of as computation.

No concessions are needed to dismiss the idea that computers merely crunch numbers; on any reasonable interpretation they also do other things by means of numbers, so this doesn’t rule out their being able to do cognition. More sophisticated is the argument that computers are, strictly speaking, abstract entities. I suppose we could put the case by saying that real computers owe their computerhood to their resemblance to Turing machines, but Turing machines can only be approximated in reality, because they have infinite tape and move between strictly discrete states, etc. Milkowski is impatient with this objection – real brains could be like real computers, which obviously exist – but reserves the question of whether computer symbols mean anything. Having swatted aside the objection that computers are not biological, Milkowski turns next to this interesting point about meaning.

He approaches the issue via the Chinese Room thought experiment and the ‘symbol grounding problem’. Symbols mean things because we interpret them that way, but computers deal only with the formal, syntactic properties of data; how do we bridge the gap? Milkowski does not abandon hope that someone will naturalise meaning effectively, and mentions the theories of Millikan and Dretske. But in the meantime, he seems to feel we can accommodate some extra function to deal with meaning without having to give up the idea that cognition is essentially computational. That seems too big a concession to me, but if Milkowski set out his thinking in more depth it might be more appealing than it seems on brief acquaintance. Milkowski dismisses as a red herring Robert Epstein’s argument that, unlike a computer, the human mind cannot accurately remember what is on a dollar bill.

The next objection, derived from Gibson and Chemero, apparently says that people do not process information, they merely pick it up. This is not an argument I’m familiar with, so I might be doing it an injustice, but Milkowski’s rejection seems sensible; only on some special reading of ‘processing’ would it seem likely that people don’t process information.

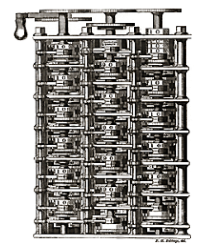

Now we come to the argument that consciousness is not computational; that computation is just the wrong sort of process to produce consciousness. Milkowski traces it back to Leibniz’s famous mill argument; the moving parts of a machine can never produce anything like experience. Perhaps we could put in the same camp Brentano’s incredulity and modern mysterianism; Milkowski mentions Searle’s assertion that consciousness can only arise from biological properties, not yet understood. Milkowski complains that if accepted, this sort of objection seems to bar the way to any reductive explanation (some of his opponents would readily bite that bullet).

Next up is an objection that computer models ignore time; this again seems to refer to the discrete states of Turing machines, and Milkowski dismisses it similarly. Next comes the objection that brains are not digital. There is in fact quite a lot that could be said on either side here, but Milkowski merely argues that a computer need not be digital. This is true, but it’s another concession; his vision of brain computationalism now seems to be of analogue computers with no software, and I don’t think that’s how most people read the claim that ‘the brain is a computer’. I think Milkowski is more in tune with most computationalists in his attitude to arguments of the form ‘computers will never be able to x’, where x has included things like playing chess. Historically these arguments have not fared well.

Can only people see the truth? This is how Milkowski describes Roger Penrose’s formal argument that only human beings can transcend the limitations which every formal system must have, seeing the truth of propositions that cannot be proved within the system. Milkowski invokes arguments about whether this transcendent understanding can be non-contradictory and certain, but at this level of brevity the arguments can only be gestured at.

The objection Milkowski gives most credit to is the claimed impossibility of formalising common sense. It is at least very difficult, he concedes, but we seem to be getting somewhere. The objection from common sense is a particular case of a more general one which I think is strong: formal processes like computation are not able to deal with the indefinite realms that reality presents. It isn’t just the Frame Problem; computation also fails to cope with the indefinite ambiguity of meaning (the problem Quine identified for translation), whereas human communication actually exploits the polyvalence of meaning through the pragmatics of normal discourse, rich in Gricean implicatures.

Finally Milkowski deals with two radical arguments. The first says that everything is a computer; that would make computationalism true, but trivial. Well, says Milkowski, there’s computation and computation; the radical claim would make even normal computers trivial, which we surely don’t want. The other radical case is that nothing is really a computer, or rather that whether anything is a computer is simply a matter of interpretation. Again this seems too destructive and too sweeping. If it’s all a matter of interpretation, says Milkowski, why update your computer? Just interpret your trusty Windows Vista machine as actually running Windows 10 – or macOS, why not?