Alfredo Perreira Jnr has kindly let me see an ambitious paper he and Leonardo Ferreira Almada have produced: Conceptual Spaces and Consciousness: Integrating Cognitive and Affective Processes (forthcoming in the International Journal of Machine Consciousness).

The unifying theme of the paper is indeed the integration of emotional and neutral cognitive processes, but it falls into two distinct parts.

The first, drawing on the work of Peter Gärdenfors, sets out the heady vision of a universal state space of consciousness. Such a state space, as I understand it, would be an imaginary space constructed from a large number of dimensions each corresponding to one of the continuously variable aspects of consciousness. In principle this would provide a model of all possible states of consciousness, such that anyone’s life experience would form a path through some area of the space.

The challenges involved in actually populating such a theoretical construct with real data are naturally daunting. Perreira and Almada suggest that it could be approached on the basis of reported states of consciousness. An immediate problem is that qualia, the essence of subjective experience, are widely considered to be unreportable. Perreira and Almada meet this head on by adopting the heterophenomenology of Daniel Dennett. This approach (which I think implies scepticism about ineffable qualia) studies phenomenal experience indirectly, through what subjects say about their own experience: the third-person perspective. Perreira and Almada note that Dennett adopted this stance mainly as a means of refuting first-person approaches, but I’m sure he would (or will) be delighted to hear of its being adopted as the explicit basis of serious research. It’s implicit in this approach that we’re dealing with states that are capable of ‘inter-subjective validation’, that is, states which are accessible to all conscious entities. This rules out objections on the grounds that, say, Andy having experience X is not the same as Bill having experience X, though in so doing it may appreciably impoverish the scope of the exercise. It could be that the set of experiences common to all conscious beings is actually a significantly restricted subset of the whole realm of conscious experience. For that matter, can we afford to ignore the unconscious or the subconscious? At times the borderline between conscious states and their near relations may be blurry.

I think two other worries are worth a mention. The state space model suggests that all trajectories are equally valid, but it seems unlikely that this is the case here. Consciousness is a stream, both emotionally and cognitively: certain kinds of state naturally follow other kinds of state. In fact, it doesn’t seem too much to claim that some states refer to previous states: we can’t repent our anger intelligibly without having first been angry. The business of reference, moreover, is a problem in itself. We’ve already excluded the possibility of Andy’s anger being different from Bill’s, but we can also be in the same state of anger about different things, which seems a material difference. Me being angry about my tax return is not really the same state of consciousness as me being angry about receiving a parking ticket, though in principle the anger itself could be identical. Because, thanks to the miracle of intentionality, we can be angry about anything, including imaginary and logically absurd entities, this is a large problem. Either we exclude these intentional factors – and put up with a further substantial impoverishment of our state space – or the size of our state space balloons out infinitely in all directions.

The practical problems are not necessarily fatal, of course: it’s not as if Perreira and Almada were actually proposing a fully-documented description of the universal state space. What they do suggest is that if we assume another state space (wow!) corresponding to all the possible biophysical states of the brain, we can then hypothesise a mapping of points in one space to points in the other, which would give us the prize of a reduction of conscious experience to physical brain function. Now I think a biophysical state space of the brain faces formidable difficulties of its own: for one thing, we really don’t know exactly which biophysical features of the brain are functionally relevant; for another, different brains are not wired the same way – and of course the sheer complexity of the thing is mind-boggling. The biophysical state space of a single neuron is a non-trivial proposition.

However, at a purely theoretical level, this is a nice rigorous statement of what the much-sought Neural Correlates of Consciousness might actually be. If we merely claim that there is a mapping between the two state spaces, we have a sort of rock-bottom version of NCCs, a possible statement of the minimum claim. We would expect there to be some more general correspondences and matches between regions and trajectories in the two spaces – though I think it would be optimistic to expect these to be simple (and constructing the two state spaces and then observing the regularities would be a remarkably long way round to discovering correspondences between brain and mind activity). Still, the fact that these pesky NCCs turn out to be more abstract and problematic than we might have hoped is in itself a conclusion worthy of note.

All these heroic speculations are in any case just the hors d’oeuvres for Perreira and Almada: the state space of consciousness would have to represent emotional affect as well as rational cognition, so how would that work? They proceed to review a series of proposals for integrating emotion and cognition. Damasio’s Somatic Marker Hypothesis, which has emotional affect deriving from states of the body, is favourably considered, though criticised for elements of circularity. The alternative view that emotions come first avoids such problems but is criticised for not squaring with empirical evidence. Perreira and Almada suggest that a better third alternative might be based on mapping the complex inter-relations of cognition and affect, and give a friendly mention to the oft-quoted Global Workspace theory of Bernard Baars. Now we can begin to see where the discussion is going, but first the paper brings in a new element.

This is a discussion of what actually makes mental states conscious – embodiment, higher order states, or what? Perreira and Almada look at a number of proposals, including Arnold Trehub’s retinoid system and Tononi’s concept of Phi, a measure of integrated information. Briefly, they conclude that something beyond all these approaches is needed, and something which puts the integration of affect and cognition at the heart of the system.

Now we come to the second major part of the paper, where Perreira and Almada introduce their own proposal: step forward the astrocytes.

Astrocytes are the most common form of glial cell, which are ‘the other brain cells’. Neurons have generally had all the glory in the past; historically it was assumed that the role of glia was essentially as packing for the neurons – in fact ‘glia’ is the Greek word for ‘glue’. In recent years, however, it has become gradually clearer that glia, and astrocytes in particular, are more important than that. They form a second network of their own, across which ‘calcium waves’ are propagated. I think it would be true to say that the standard view now sees astrocytes as important in supporting and modulating neural function, while still reserving the main functional significance for all those showy synaptic fireworks that neurons engage in. Perreira and Almada want to give the neural and glial networks something like parity of esteem. The proposal, in essence, is that plain cognition is done by the neurons, while feelings are carried by large astrocytic calcium waves: only when the two come together does consciousness arise. Consciousness is the “astroglial integration of information contents carried by neurons”.

What about that? It’s a bold and novel hypothesis (something we certainly need); it’s at least superficially plausible and has a definite intuitive appeal. But there are objections. First, there seem to be other established candidates for the role of feeling-provider. We know that certain parts of the brain are required for certain kinds of affect – the role of the amygdala in producing ‘fear and loathing’ (or perhaps we should say ‘reasonable distrust and aversion’) has been much discussed. Certain emotions are almost proverbially (which of course is not to say accurately) associated with hormones and the action of certain glands. This needs to be addressed, but I don’t think Perreira and Almada would have too much difficulty in setting out a picture which accommodated these other systems.

More difficult I think are two more fundamental questions. Why would astrocytic calcium waves cause, or amount to, feelings? And why would those feelings, when associated with cognitive information, constitute consciousness? Damasio’s and other theories can offer a clearer answer on the first point because it’s at least plausible that emotional states can be reduced to the pounding heart, the watering eyes, the churning stomach: calcium waves rippling across the brain somehow don’t seem as obviously relevant. And then, is it really the case that all conscious states have emotional affect? Perreira and Almada suggest that if neurons alone are involved (or astrocytes alone) all you get is proto-consciousness: but intuitively there doesn’t seem anything difficult about completely dispassionate but fully conscious thought.

One strength of the theory is that it seems likely to be more open to direct scientific testing than most theories of consciousness: a few solid experiments would probably relegate the kind of objection I’ve mentioned to secondary status. So perhaps we’ll see…

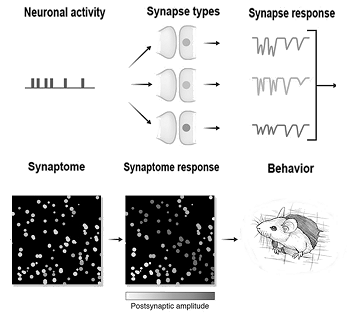
