Charles Babbage was not the only Victorian to devise a thinking machine.

He is, of course, considered the father, or perhaps the grandfather, of digital computing. He devised two remarkable calculating machines: the Difference Engine was meant to produce error-free mathematical tables for navigation or other uses; the Analytical Engine, an extraordinary leap of the imagination, would have been the first true general-purpose computer. Although Babbage failed to complete the building of the first, and the second never got beyond the conceptual stage, his achievement is rightly regarded as a landmark, and the Analytical Engine routines published by Lady Lovelace in 1843 with a translation of Menabrea’s description of the Engine have gained her recognition as the world’s first computer programmer.

The digital computer, alas, went no further until Turing a hundred years later; but in 1851 Alfred Smee published The Process of Thought adapted to Words and Language together with a description of the Relational and Differential Machines – two more designs for cognitive mechanisms.

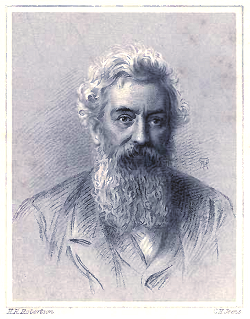

Smee held the unusual post of Surgeon to the Bank of England – in practice he acted as a general scientific and technical adviser. His father had been Chief Accountant to the Bank and little Alfred had literally grown up in the Bank, living inside its City complex. Apparently, once the Bank’s doors had shut for the night, the family rarely went to the trouble of getting them unlocked again to venture out; it must have been a strangely cloistered life. Like Babbage and other Victorians involved in London’s lively intellectual life, Smee took an interest in a wide range of topics in science and engineering, with his work in electro-metallurgy leading to the invention of a successful battery; he was a leading ophthalmologist and among many other projects he also wrote a popular book about the garden he created in Wallington, south of London – perhaps compensating for his stonily citified childhood?

Smee was a Fellow of the Royal Society, as was Babbage, and the two men were certainly acquainted (Babbage, a sociable man, knew everyone anyway and was on friendly terms with all the leading scientists of the day; he even managed to get Darwin, who hated socialising and suffered persistent stomach problems, out to some of his parties). However, it doesn’t seem the two ever discussed computing, and Smee’s book never mentions Babbage.

That might be in part because Smee came at the idea of a robot mind from a different, biological angle. As a surgeon he was interested in the nervous system and was a proponent of ‘electro-biology’, advocating the modern view that the mind depends on the activity of the brain. At public lectures he exhibited his own ‘injections’ of the brain, revealing the complexity of its structure; but Golgi’s groundbreaking methods of staining neural tissue were still in the future, and Smee therefore knew nothing about neurons.

Smee nevertheless had a relatively modern conception of the nervous system. He conducted many experiments himself (he used so many stray cats that a local lady was moved to write him a letter warning him to keep clear of hers) and convinced himself that photovoltaic effects in the eye generated small currents which were transmitted bio-electrically along the nerves and processed in the brain. Activity in particular combinations of ‘nervous fibrils’ gave rise to awareness of particular objects. The gist is perhaps conveyed in the definition of consciousness he offered in an earlier work, Principles of the Human Mind Deduced from Physical Laws:

When an image is produced by an action upon the external senses, the actions on the organs of sense concur with the actions in the brain; and the image is then a Reality.

When an image occurs to the mind without a corresponding simultaneous action of the body, it is called a Thought.

The power to distinguish between a thought and a reality, is called Consciousness.

This is not very different in broad terms from a lot of current thinking.

In The Process of Thought Smee takes much of this for granted and moves on to consider how the brain deals with language. The key idea is that the brain encodes things into a pyramidal classification hierarchy. Smee begins with an analysis of grammar, faintly Chomskyan in spirit if not in content or level of innovation. He then moves on rapidly to the construction of words. If his pyramidal structure is symbolically populated with the alphabet, different combinations of nervous activity will trigger different combinations of letters and so produce words and sentences. This seems to miss out some essential linguistic level, leaving the impression that all language is spelled out alphabetically, which can hardly be what Smee believed.

When not dealing specifically with language the letters in Smee’s system correspond to qualities and this pyramid stands for a universal categorisation of things in which any object can be represented as a combination of properties. (This rather recalls Bishop Wilkins’ proposed universal language, in which each successive letter of each noun identifies a position in an hierarchical classification, so that the name is an encoded description of the thing named.)
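A rough modern sketch may make the Wilkins-style idea concrete: each successive letter of a name picks a branch at the next level of a hierarchy, so the name doubles as a description. The taxonomy, the letter assignments and the function names `decode` and `encode` below are all invented for illustration; they are not taken from Smee or Wilkins.

```python
# Toy hierarchy, invented purely for illustration.
# Each letter maps to (label, sub-hierarchy).
TAXONOMY = {
    "a": ("animal", {
        "b": ("bird", {"a": ("owl", {}), "e": ("wren", {})}),
        "f": ("fish", {"a": ("eel", {}), "e": ("pike", {})}),
    }),
    "p": ("plant", {
        "t": ("tree", {"a": ("oak", {}), "e": ("elm", {})}),
    }),
}


def decode(name):
    """Read a coded name letter by letter, descending one level per letter."""
    path = []
    level = TAXONOMY
    for letter in name:
        if letter not in level:
            raise KeyError(f"no branch {letter!r} at this level")
        label, level = level[letter]
        path.append(label)
    return path


def encode(target, level=TAXONOMY, prefix=""):
    """Search the hierarchy for a label and return its coded name, or None."""
    for letter, (label, children) in level.items():
        code = prefix + letter
        if label == target:
            return code
        found = encode(target, children, code)
        if found is not None:
            return found
    return None


print(decode("abe"))  # ['animal', 'bird', 'wren']
print(encode("elm"))  # pte
```

The point of the toy is only that the coded name "abe" carries its own classification; it also shows the weakness discussed below, since every nameable thing needs a pre-assigned slot in the hierarchy.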

At least, I think that’s the way it works. The book goes on to give an account of induction, deduction, and the laws of thought; alas, Smee seems unaware of the problem described by Hume and does not address it. Instead, in essence, he just describes the processes; although he frames the discussion in terms of his pyramidal classification his account of induction (he suggests six different kinds) comes down to saying that if we observe two characteristics constantly together we assume a link. Why do we do that – and why is it we actually often don’t? Worse than that, he mentions simple arithmetic (one plus one equals two, two times two is four) and says:

These instances are so familiar we are apt to forget that they are inductions…

Alas, they’re not inductions. (You could arrive at them by induction, but no-one ever actually does and our belief in them does not rest on induction.)

I’m afraid Smee’s laws of thought also stand on a false premise; he says that the number of ideas denoted by symbols is finite, though too large for a man to comprehend. This is false. He might have been prompted to avoid the error if he had used numbers instead of letters for his pyramid – because each integer represents an idea; the list of integers goes on forever, yet our numbering system provides a unique symbol for every one. So neither the list of ideas nor the list of symbols can be finite. Of course that barely scratches the surface of the infinitude of ideas and symbols, but it helps suggest just how unmanageable a categorisation of every possible idea really is.

But now we come to the machines designed to implement these processes. Smee believed that his pyramidal structure could be implemented in a hinged physical mechanism where opening would mean the presence or existence of the entity or quality and closing would mean its absence. One of these structures provides the Relational Machine. It can test membership of categories, or the possession of a particular attribute, and can encode an assertion, allowing us to test consistency of that assertion with a new datum. I have to confess to having only a vague understanding of how this would really work. He allows for partial closing and I think the idea is that something like predicate calculus could be worked out this way. He says at one point that arithmetic could be done with this mechanism and that anyone who understands logarithms will readily see how; I’m afraid I can only guess what he had in mind.
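One speculative way to picture the consistency test in modern terms is to treat each hinge as a quality that is open (present), closed (absent) or simply not yet set. The sketch below is an assumption-laden reading, not a reconstruction of Smee's mechanism: the `Assertion` class, the `is_consistent` function and the example qualities are all hypothetical, and partial closing is ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assertion:
    """A claim that some qualities are present (open) and others absent (closed)."""
    present: frozenset = frozenset()
    absent: frozenset = frozenset()


def is_consistent(assertion, datum):
    """Check a new datum against an assertion.

    `datum` maps a quality name to True (open) or False (closed);
    qualities it does not mention are treated as unknown and never conflict.
    """
    for quality in assertion.present:
        if datum.get(quality) is False:
            return False
    for quality in assertion.absent:
        if datum.get(quality) is True:
            return False
    return True


swan = Assertion(present=frozenset({"bird", "white"}), absent=frozenset({"fish"}))
print(is_consistent(swan, {"bird": True}))                  # True
print(is_consistent(swan, {"bird": True, "white": False}))  # False
```

Testing category membership is then the special case where the assertion lists only the qualities that define the category.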

It isn’t necessary to have two Relational Machines to deal with multiple assertions because we can take them in sequence; however the Differential Machine provides the capacity to compare directly, so that we can load into one side all the laws and principles that should guide a case while uploading the facts into the other.
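As a hedged illustration of that side-by-side comparison, the two sides can be modelled as tables of qualities marked present or absent, with the machine reporting where they disagree. The function names `compare` and `unaddressed` and the legal example are invented here; Smee's text does not specify anything this precise.

```python
def compare(laws, facts):
    """Return the qualities on which the two sides disagree.

    Both arguments map a quality name to True (present) or False (absent).
    The result maps each conflicting quality to (required, observed).
    """
    shared = sorted(laws.keys() & facts.keys())
    return {q: (laws[q], facts[q]) for q in shared if laws[q] != facts[q]}


def unaddressed(laws, facts):
    """Return the requirements the facts say nothing about."""
    return sorted(laws.keys() - facts.keys())


# Hypothetical requirements for a valid document, and the facts of one case.
laws = {"signed": True, "witnessed": True, "under_duress": False}
facts = {"signed": True, "witnessed": False, "dated": True}

print(compare(laws, facts))      # {'witnessed': (True, False)}
print(unaddressed(laws, facts))  # ['under_duress']
```

Loading both sides at once is what distinguishes this from running a single machine over the assertions one after another.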

Smee had a number of different ideas about how the machines could be implemented, and says he had several part-completed prototypes of partial examples on his workbench. Unlike Babbage’s designs, though, his were never meant to be capable of full realisation: although he thinks the Relational Machine is finite, he says it would cover London and the mechanical stresses would destroy it immediately if it were ever used; moreover, using it to crank out elementary deductions would be so slow and tedious that people would soon revert to using their wonderfully compact and efficient brains instead. But partial examples will helpfully illustrate the process of thought and help eliminate mistakes and ‘quibbles’. Later chapters of the book explore things that can go wrong in legal cases, and describe a lot of the quibbles Smee presumably hopes his work might banish.

I think part of the reason Smee’s account isn’t clearer (to me, anyway) is that his ideas were never critiqued by colleagues and he never got near enough to a working prototype to experience the practical issues sufficiently. He must have been a somewhat lonely innovator in his lab in the Bank and in fairness the general modernity of his outlook makes us forget how far ahead of his time he was. When he published his description of his machines, Wundt, generally regarded as the founder of scientific psychology, was still an undergraduate. To a first approximation, nobody knew anything about psychology or neurology. Logic was still essentially in the long Aristotelian twilight – and of course we know where computing stood. It is genuinely remarkable that Smee managed, over a hundred and fifty years ago, to achieve a proto-modern, if flawed, understanding of the brain and how it thinks. Optimists will think that shows how clever he was; pessimists will think it shows how little our basic conceptual thinking has been updated by the progress of cognitive science.