All I want for Christmas is a new brain? There seems to have been quite a lot of discussion recently about the prospect of brain augmentation: adding in some extra computing power to the cognitive capacities we have already. Is this a good idea? I’m rather sceptical myself, but then I’m a bit of a Luddite in this area; I still don’t like the idea of controlling a computer with voice commands all that much.

Hasn’t evolution already optimised the brain in certain important respects? I think there may be some truth in that, but it doesn’t look as if evolution has done a perfect job. There are certainly one or two things about the human nervous system that look as if they could easily be improved. Think of the way our neural wiring crosses over from right to left for no particular reason. You could argue that although that serves no purpose it doesn’t do any real harm either, but what about the way our retinas are wired up from the front instead of the back, creating an entirely unnecessary blind spot where the bundle of nerves actually enters the eye – a blind spot which our brain then stops us seeing, so we don’t even know it’s there?

Nobody is proposing to fix those issues, of course, but aren’t there some obvious respects in which our brains could be improved by adding in some extra computational ability? Could we be more intelligent, perhaps? I think the definition of intelligence is controversial, but I’d say that if we could enhance our ability to recognise complex patterns quickly (which might be a big part of it) that would definitely be a bonus. Whether a chip could deliver that seems debatable at present.

Couldn’t our memories be improved? Human memory appears to have remarkable capacity, but retaining and recalling just those bits of information we need has always been an issue. Perhaps related, we have that annoying inability to hold more than a handful of items in our minds at once, a limitation that makes it impossible for us to evaluate complex disjunctions and implications, so that we can’t mentally follow a lot of branching possibilities very far. It certainly seems that computer records are in some respects sharper, more accurate, and easier to access than the normal human system (whatever the normal human system actually is). It would be great to remember any text at will, for example, or exactly what happened on any given date within our lives. Being able to recall faces and names with complete accuracy would be very helpful to some of us.

On top of that, couldn’t we improve our capacity for logic so that we stop being stumped by those problems humans seem so bad at, like the Wason test? Or if nothing else, couldn’t we just have the ability to work out any arithmetic problem instantly and flawlessly, the way any computer can do?

The key point here, I think, is integration. On the one hand we have a set of cognitive abilities that the human brain delivers. On the other, we have a different set delivered by computers. Can they be seamlessly integrated? The ideal augmentation would mean that, for example, if I need to multiply two seven-digit numbers I ‘just see’ the answer, the way I can just see that 3+1 is 4. If, on the contrary, I need to do something like ask in my head ‘what is 6397107 multiplied by 8341977?’ and then receive the answer spoken in an internal voice or displayed in an imagined visual image, there isn’t much advantage over using a calculator. In a similar way, we want our augmented memory or other capacity to just inform our thoughts directly, not be a capacity we can call up like an external facility.

So is seamless integration possible? I don’t think it’s possible to say for certain, but we seem to have achieved almost nothing to date. Attempts to plug into the brain so far have relied on setting up artificial linkages. Perhaps we detect a set of neurons that reliably fire when we think about tennis; then we can ask the subject to think about tennis when they want to signal ‘yes’, and detect the resulting activity. It sort of works, and might be of value for ‘locked in’ patients who can’t communicate any other way, but it’s very slow and clumsy otherwise – I don’t think we know for sure whether it even works long-term or whether, for example, the tennis linkage gradually degrades.

What we really want to do is plug directly into consciousness, but we have little idea of how. The brain does not modularise its conscious activity to suit us, and it may be that the only places we can effectively communicate with it are the places where it draws data together for its existing input and output devices. We might be able to feed images into early visual processing or take output from nerves that control muscles, for example. But if we’re reduced to that, how much better is that level of integration going to be than simply using our hands and eyes anyway? We can do a lot with those natural built-in interfaces; simple reading and writing may well be the greatest artificial augmentation the brain can ever get. So although there may be some cool devices coming along at some stage, I don’t think we can look for godlike augmented minds any time soon.

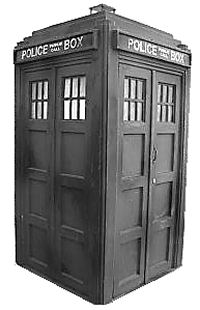

Incidentally, this problem of integration may be one good reason not to worry about robots taking over. If robots ever get human-style motivation, consciousness, and agency, the chances are that they will get them in broadly the way we get them. This suggests they will face the same integration problem that we do; seven-digit multiplication may be intrinsically no easier for them than it is for us. Yes, they will be able to access computers and use computation to help them, but you know, so can we. In fact we might be handier with that old keyboard than they are with their patched-together positronic brain-computer linkage.
